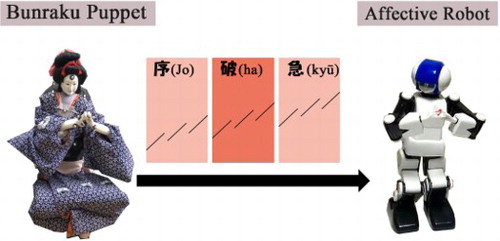

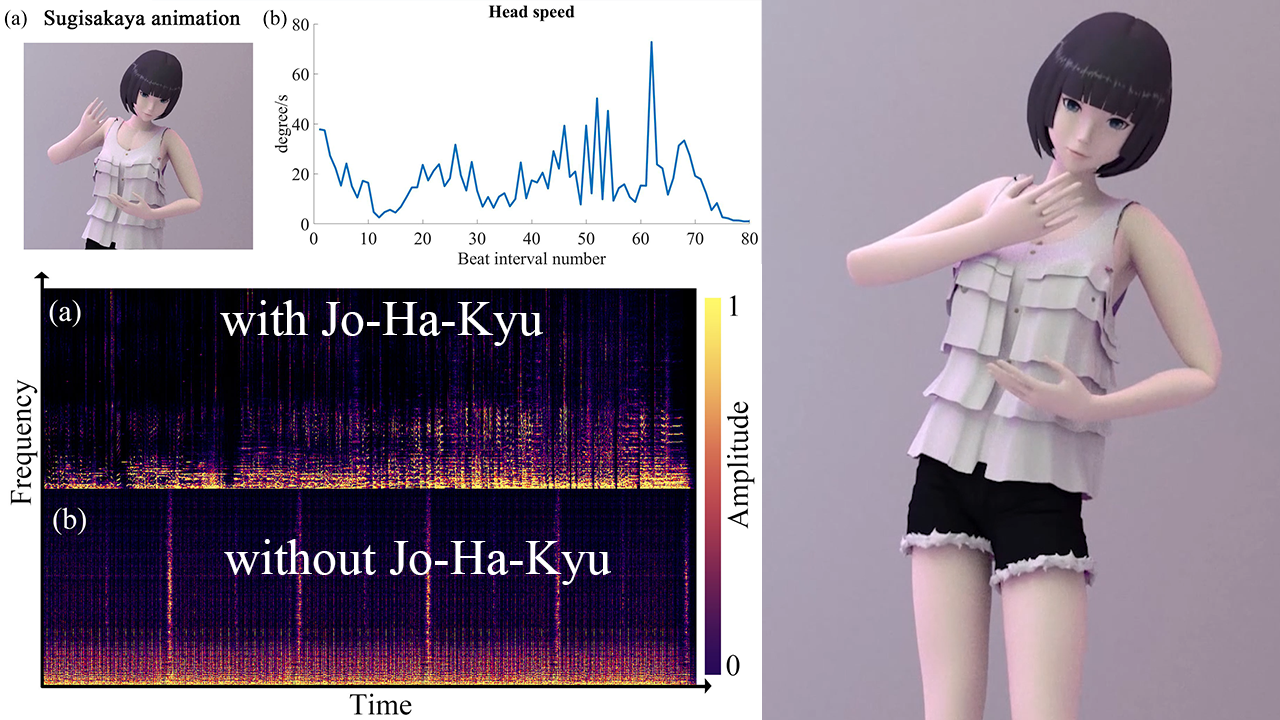

Investigating the Effect of Jo-Ha-Kyū on Music Tempos and Kinematics across Cultures: Animation Design for 3D Characters Using Japanese Bunraku Theater

Ran Dong, Dongsheng Cai, Shingo Hayano, Shinobu Nakagawa, Soichiro Ikuno. Leonardo, 55(5), 468-474, 2022

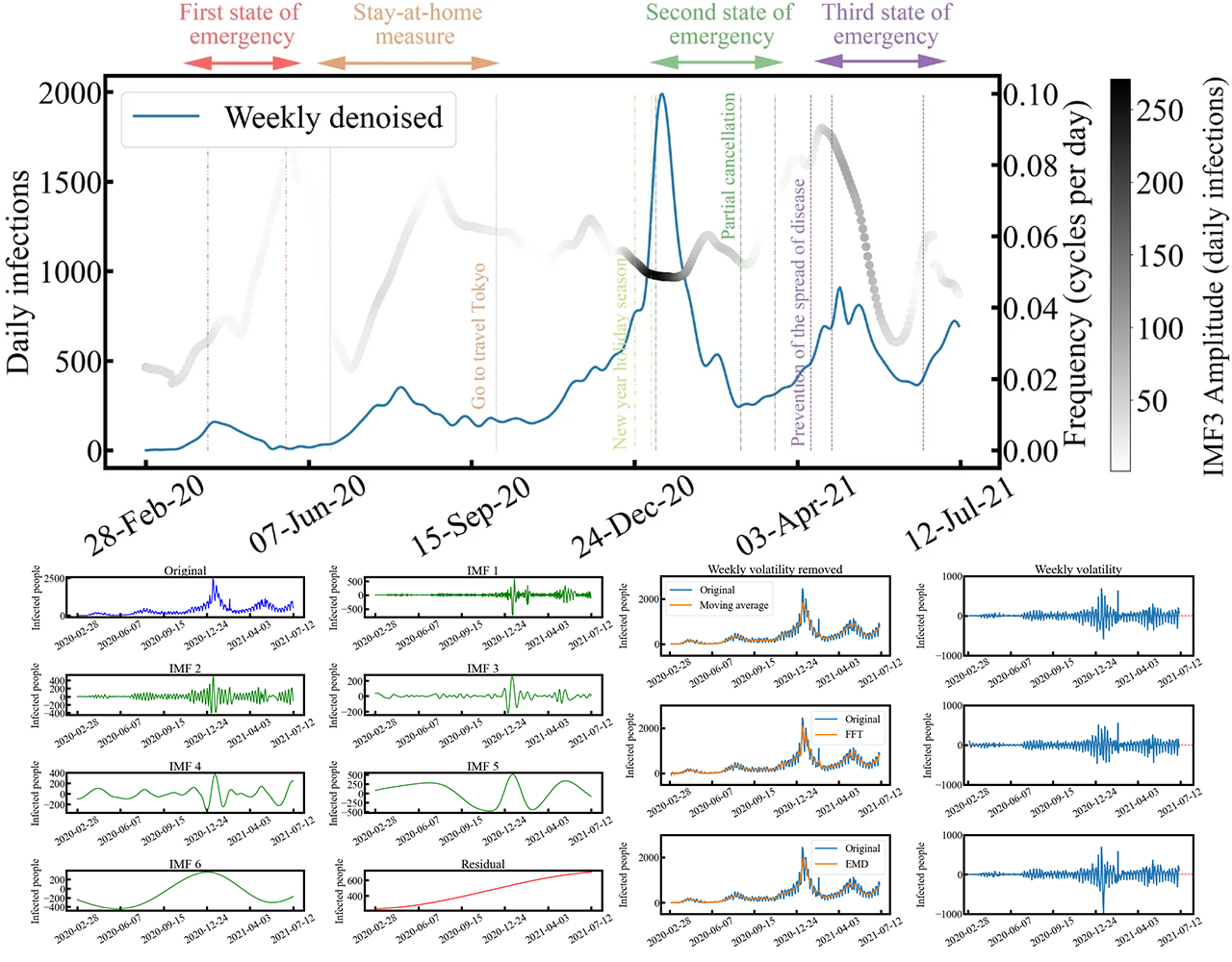

Nonlinear frequency analysis of COVID-19 spread in Tokyo using empirical mode decomposition

Ran Dong, Shaowen Ni, Soichiro Ikuno. Scientific Reports, 12(1), 1-12, 2022

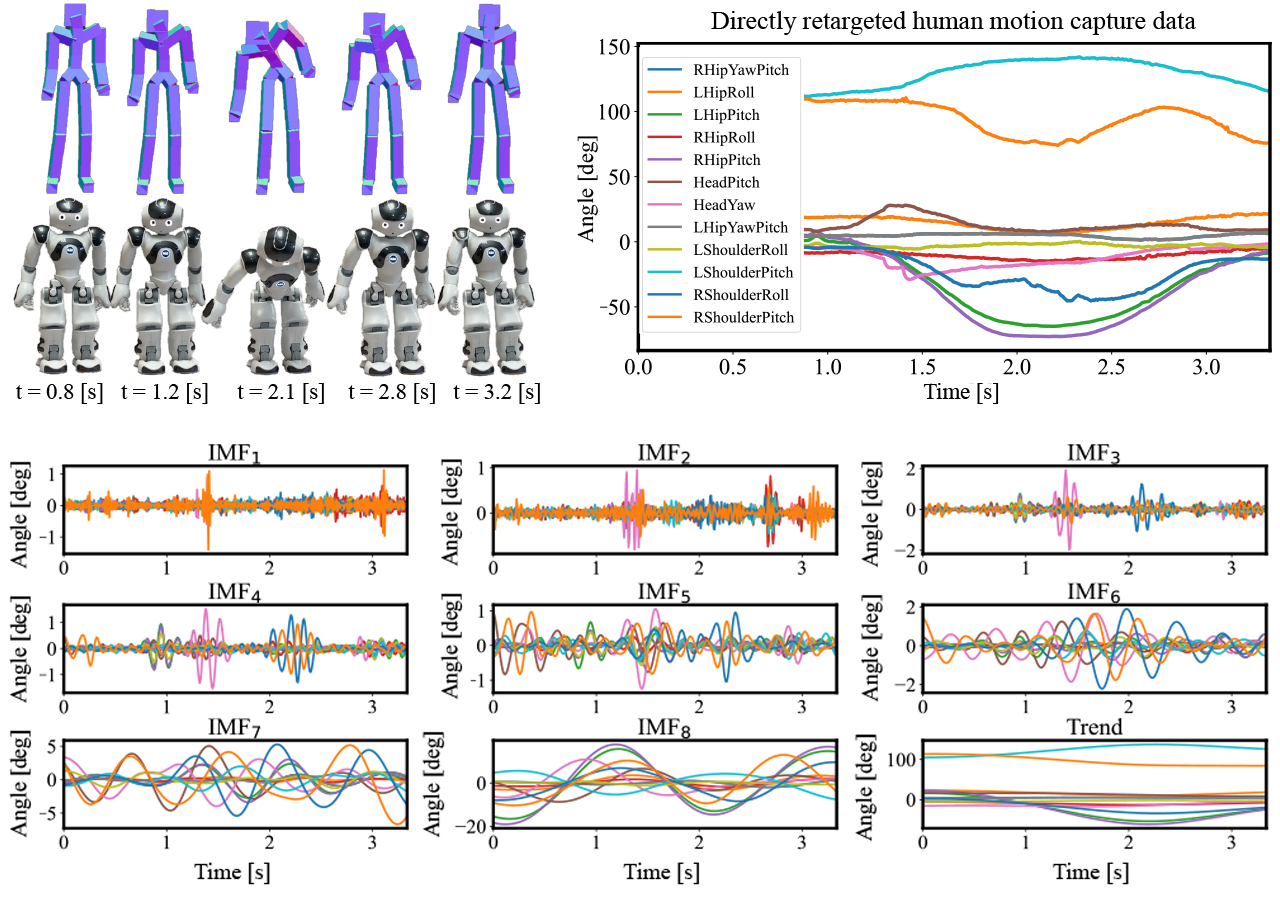

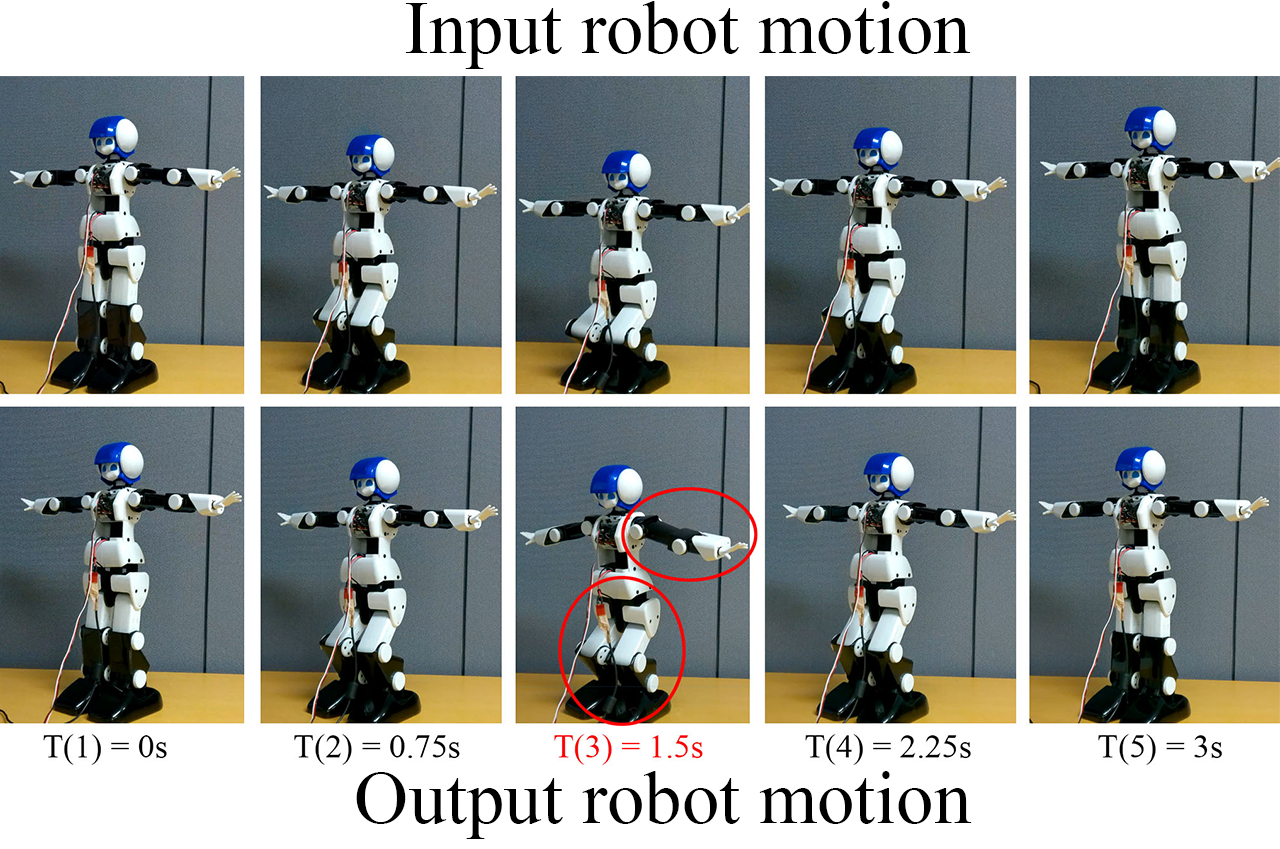

A deep learning framework for realistic robot motion generation

Ran Dong, Qiong Chang, Soichiro Ikuno. Neural Computing and Applications, 35, 23343-23356, 2021

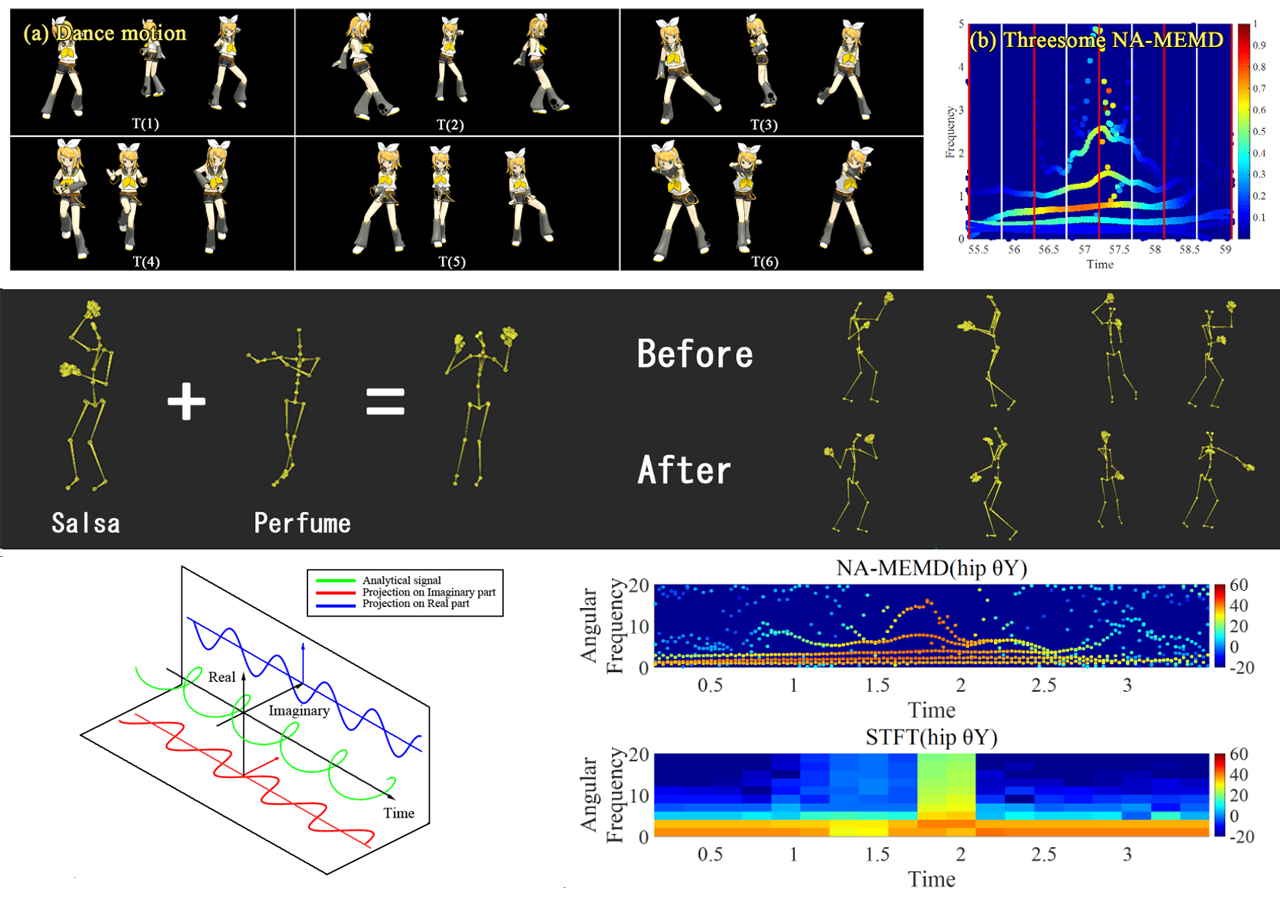

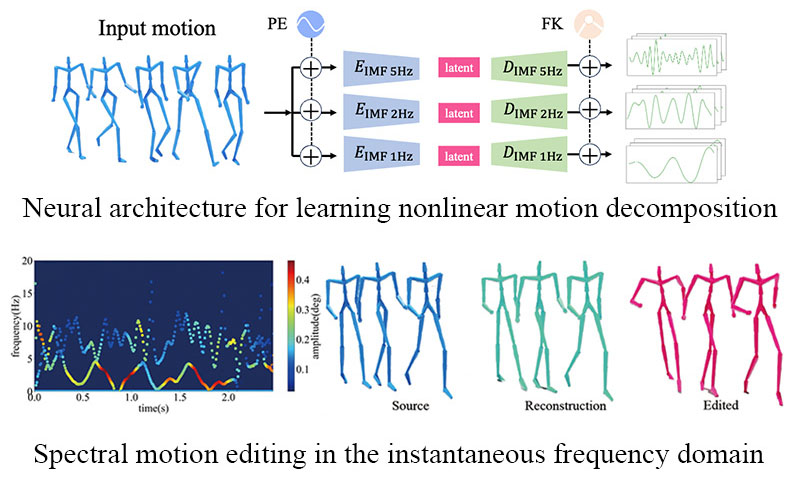

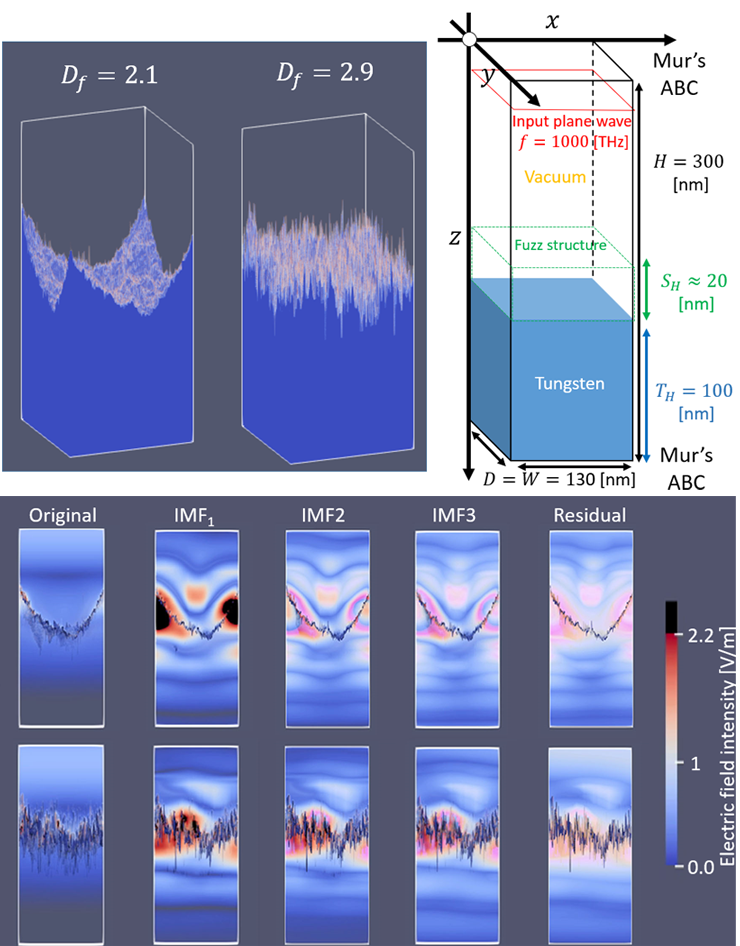

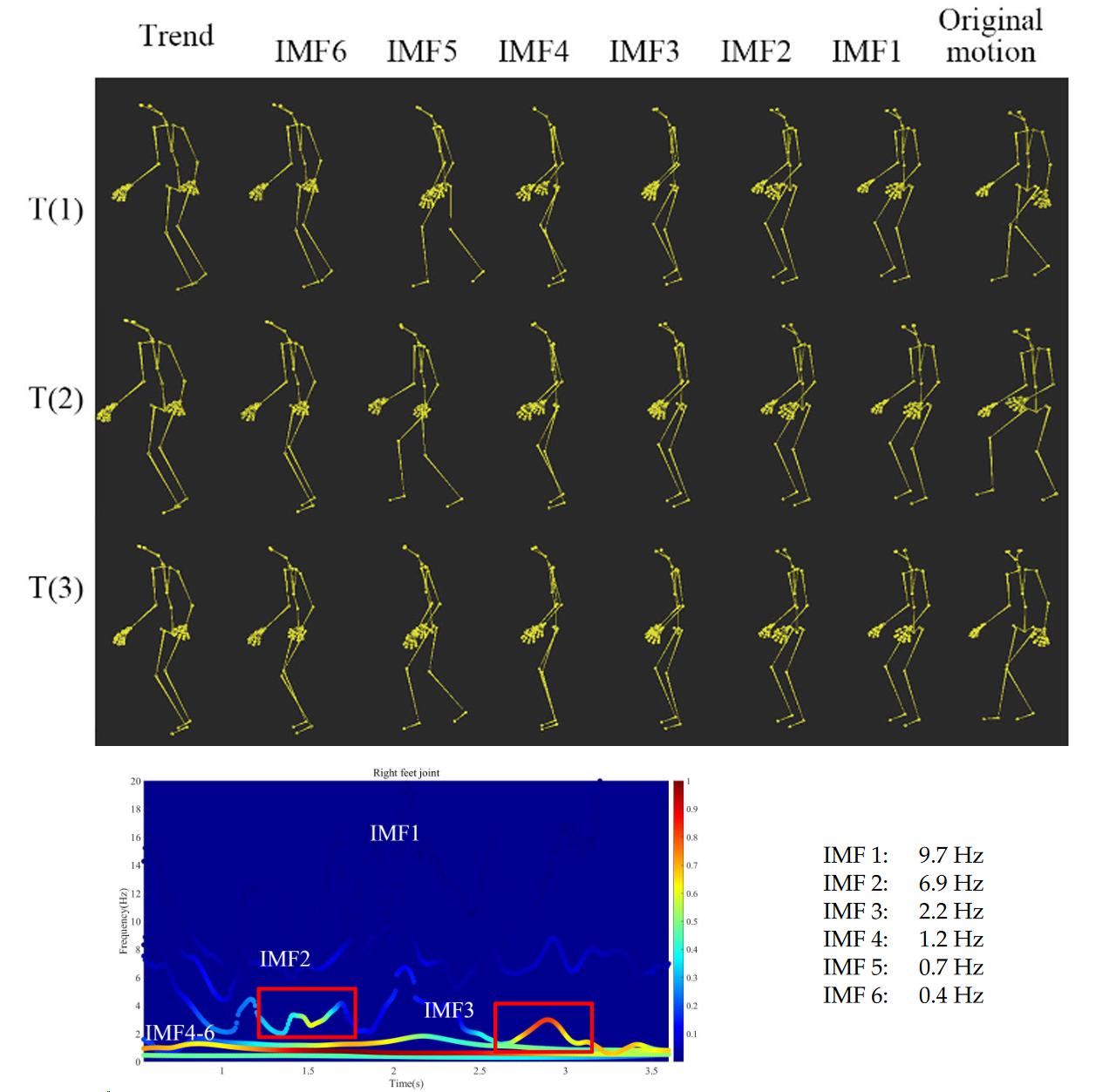

Motion Capture Data Analysis in the Instantaneous Frequency-Domain Using Hilbert-Huang Transform

Ran Dong, Dongsheng Cai, Soichiro Ikuno. Sensors, 20, 6534, 2020